Facebook has recently introduced an AI-based feature that scans your activity for signs of suicidal or otherwise unhealthy thoughts. When it finds something concerning, it alerts your friends and local first responders to the possible risk.

To prevent suicides, the social media company has also partnered with 80 other organizations, including Save.org, the National Suicide Prevention Lifeline, and Forefront, to help keep users safe.

The new suicide prevention tool has been in testing since the start of the year. Its reviewers include suicide prevention and counselling experts with specific training in handling such cases.

How it Works

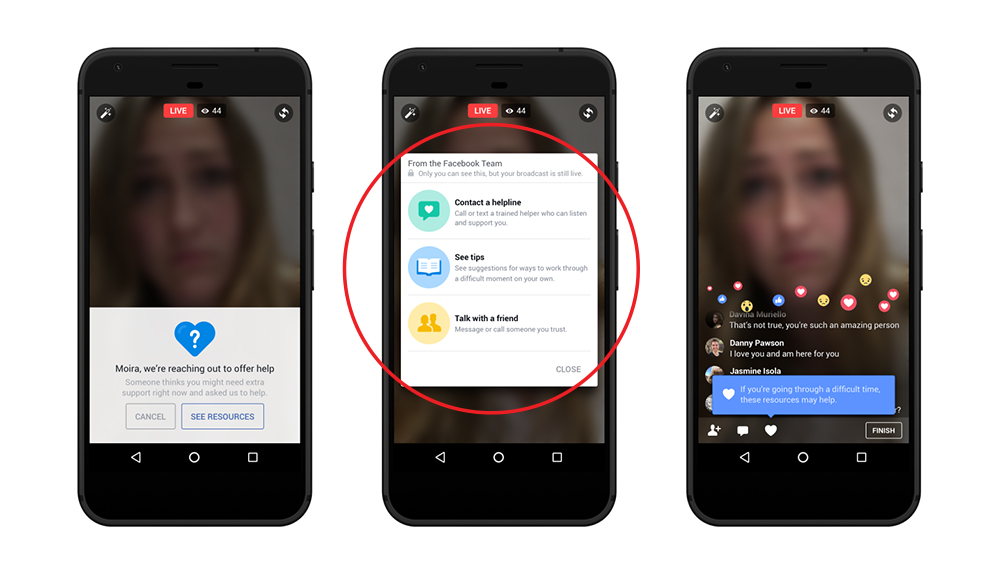

The “proactive detection” AI technology that Facebook uses for this feature can flag unusual activity and report it to moderators so that help arrives promptly. Besides monitoring posts for suicidal content, Facebook will also use AI to respond urgently to ‘risky user reports’ and provide assistance if required.

The system also prioritizes posts so that the ones that seem most critical get help first. In a video, the points that drew the most comments or reactions can be identified and used as signals. Comments such as “Are you ok?” and “Can I help?” are also strong indicators that the user in question needs help.
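To make the prioritization idea concrete, here is a minimal Python sketch. It is not Facebook's implementation, which has not been published. The `Post` type, the phrase list, and the weights are all hypothetical, chosen only to show how concerned comments and overall engagement could be combined into a review order.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical phrase list: the article only mentions these two examples.
CONCERN_PHRASES = ("are you ok", "can i help")


@dataclass
class Post:
    post_id: str
    comments: list[str] = field(default_factory=list)
    reactions: int = 0


def concern_score(post: Post) -> float:
    """Toy urgency score: concerned comments weigh more than raw engagement."""
    concerned = sum(
        any(phrase in comment.lower() for phrase in CONCERN_PHRASES)
        for comment in post.comments
    )
    # The weights 5.0 and 0.1 are arbitrary illustration values (assumption).
    return 5.0 * concerned + 0.1 * (len(post.comments) + post.reactions)


def review_queue(posts: list[Post]) -> list[Post]:
    """Order posts so that the highest-scoring ones are reviewed first."""
    return sorted(posts, key=concern_score, reverse=True)
```

With this sketch, a post with only two comments, “Are you OK?” and “Can I help?”, would be placed ahead of a popular post with many reactions but no worried comments. A real system would presumably rely on trained classifiers rather than fixed phrases.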

In the future, the pattern recognition is expected to become strong enough to detect nuances in language and to extend to other uses, such as spotting online bullying and hate speech. The system will eventually be rolled out worldwide (except in the EU), starting with the United States.

Spying on Users?

Some people fear that this ‘proactive scanning’ of content on Facebook could also undermine their privacy. In response, the company has assured users that the technology will be used only for its intended purpose.

With the help of AI, Facebook could try to prevent tragedies such as killings broadcast live and suicides announced in posted notes.


This step is a sign that Facebook cares about its 2 billion users and is taking responsibility for their well-being and safety.

It is now up to users to use this feature to report real threats rather than abusing it to blackmail the community for personal gain.

Via TechCrunch
