Researchers at Microsoft have reached a new milestone toward computers that understand everyday speech.

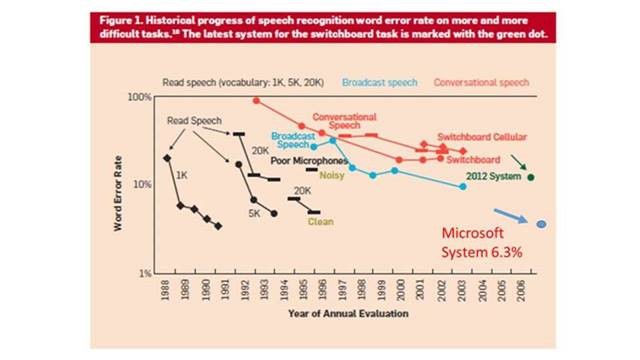

The main takeaway? Computers are getting better at understanding the words we speak. The rate at which a computer mistakes a word has dropped from 43% some two decades ago to 6.3% today. Many players have contributed to that decline, but Microsoft’s latest innovation in speech recognition has narrowed the gap significantly.

Neural Networks Hold The Key To Speech Recognition

Microsoft and IBM both cite the advent of deep neural networks as the reason for recent advances in speech recognition. Deep neural networks are inspired by the biological processes of the human brain and mimic them in software, helping computers understand speech better.

Microsoft’s chief speech research scientist, Xuedong Huang, reported that by using neural networks, his team achieved a Word Error Rate (WER) of 6.3 percent. This result came on the industry-standard Switchboard speech recognition task, where Microsoft’s WER was the lowest among the speech recognition systems compared.
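For readers wondering what a WER of 6.3 percent actually measures: WER is conventionally defined as substitutions plus deletions plus insertions, divided by the number of words in the reference transcript, with the counts taken from a word-level edit-distance alignment. The minimal Python sketch below illustrates that standard definition. The function name `word_error_rate` is hypothetical, and this is not Microsoft’s or the Switchboard benchmark’s scoring tool; real evaluations typically use dedicated scoring software and text normalization.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Illustrative WER: (substitutions + deletions + insertions) / reference length.

    Hypothetical helper for explanation only, not an official scoring tool.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # dist[i][j] = minimum number of word edits turning ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match if cost is 0)
            )

    return dist[len(ref)][len(hyp)] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the"): 2 errors over 6 words.
print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
```

Read this way, a 6.3 percent WER means roughly 6.3 word errors for every 100 words in the reference transcript.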

At Interspeech, an international conference on speech communication and technology held in San Francisco, IBM reported that it had achieved a WER of 6.9 percent. Only two decades ago, the WER was as high as 43%.

How Microsoft Managed to Achieve This

These neural networks are built from many stacked layers. Recently, Microsoft’s research team won the ImageNet computer vision challenge with a deep residual neural network, which uses a new kind of cross-layer connection.
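The core idea behind a residual network is a shortcut connection: a block’s input skips over its layers and is added to their output, so each block only has to learn a correction to its input. The pure-Python sketch below shows that idea on plain lists. It is a simplified illustration under stated assumptions: the names `residual_block`, `linear` and `relu` are hypothetical, and the ImageNet-winning network used convolutional layers and batch normalization, which are omitted here.

```python
def relu(v):
    # Element-wise rectified linear unit.
    return [max(0.0, a) for a in v]


def linear(weights, v):
    # Matrix-vector product: each row of `weights` produces one output value.
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def residual_block(x, w1, w2):
    # Hypothetical simplified block: F(x) = W2 * relu(W1 * x)
    f = linear(w2, relu(linear(w1, x)))
    # Shortcut connection: add the block's input x to F(x), then apply relu.
    return relu([fi + xi for fi, xi in zip(f, x)])


identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
print(residual_block([1.0, -2.0], identity, identity))
print(residual_block([1.0, -2.0], zeros, zeros))
```

With all-zero weights, the layers contribute nothing and the block simply passes its (rectified) input through the shortcut, which is one intuition for why very deep stacks of such blocks remain trainable.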

This, coupled with the Computational Network Toolkit (CNTK), drove Microsoft’s advances in speech recognition. CNTK allows neural network algorithms to run orders of magnitude faster than they otherwise would. Another factor is the use of GPUs (graphics processing units, or graphics cards in layman’s terms).

GPUs are very good at parallel processing, which lets deep neural network algorithms run much more efficiently. As evidence, Cortana, Microsoft’s voice assistant, can process 10 times more speech data thanks to GPUs and CNTK.

Via Microsoft blog
