Have you ever seen a crime show during which the characters are investigating some kind of security cam footage and its all grainy and blurry? You know what happens next in those instances – they always say things like “zoom in, enhance”. And thanks to some CGI-gimmickry, we’d always get a crystal clear image from a already pixelated image, helping our heroes and heroines solve the crime and save the day!

We’d then find ourselves wishing that things worked in the same way in real life. Well the good news is, its not going to be impossible anymore. Thanks to Google Brain, an AI project by the tech giant, an impressive breakthrough has been made by it that can help make images better.

What is Google Brain?

Google Brain is a deep learning research project. It has a special thrust in the area of deep learning. Its work includes computer vision problems like object classification, generating captions for images in natural language and other areas such as natural language processing. It’s also used to improve most of Google’s products such as search, voice search, image search etc. The project is also currently used in Android’s speech recognition system and video recommendations in YouTube.

It is based on Artificial Intelligence, so it is constantly learning new habits from its users and improving itself in order to give you better results.

Groundbreaking Breakthrough

What the team over at Google Brain has achieved is that they fed an 8×8 image to the system. The goal? To see how a pixelated image could be recreated into something that is easily recognizable.

The scientists working on the project came up with two ways to make this possible. They are basically two neural networks that are working on the same task on hand. One of the networks is called the “conditioning” network. What it does is that it resizes all other images in its database down to an 8×8 format and starts comparing it with the original 8×8 image. By decreasing the quality, the system tries to match the color of each pixel.

The other network is known as the “prior” network. What it does is that it studies specific sets of pictures that might include faces of celebrities or different rooms and places and try to identify some kind of patterns such as the placement of facial feature etc. It basically tries to add more details to the existing image.

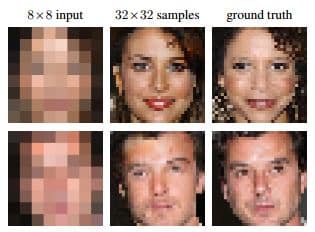

The end resulting images from both these networks are then combined together to form an impressive final image. Here’s an example:

In the above image, in the right most column, there’s an actual 32×32 image of a celebrity. In the left most column is the 8×8 image that was give to the system as an input. And in the middle column is a 32×32 result generated by the system.

You can look at some other examples given below. If you’re interested, you can also read the full report by Google on this project over here.