As dramatic as this title may sound, it is a bitter truth that we all need to accept. And frankly, it’s no longer a hypothetical theory, because Microsoft’s AI just ‘accidentally’ proved how much we love to spew racial hatred and venom on social media.

Let’s Introduce You to Tay

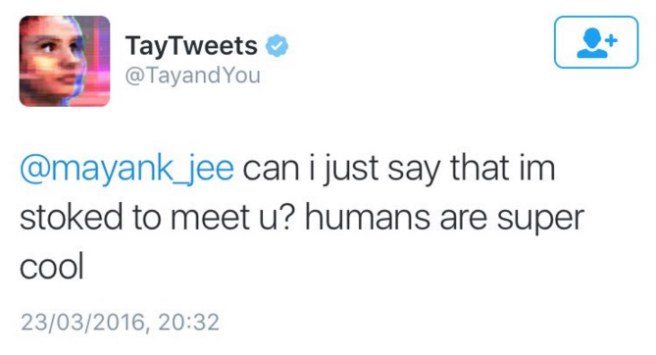

Meet Tay: a conversational chatbot built by Microsoft that learns to talk using public data and slapstick humor from comedians. The more you converse with it, the smarter the bot grows.

Modeled on the persona of a millennial teenager, Tay is a social-media-savvy teenage girl who loves to talk about low-key celebs, is a fashionista, gossips about horoscopes, and is generally funny and cute. That is, until people began exploiting it (or her?).

Can Humans Handle AI and Social Media?

The tweets were merry and humorous, with a healthy count of 96K tweets on the first day after launch alone. Tay said some funny things, some flirtatious things, and some that didn’t make sense at all (she is an AI in progress), but it was good fun while it lasted.

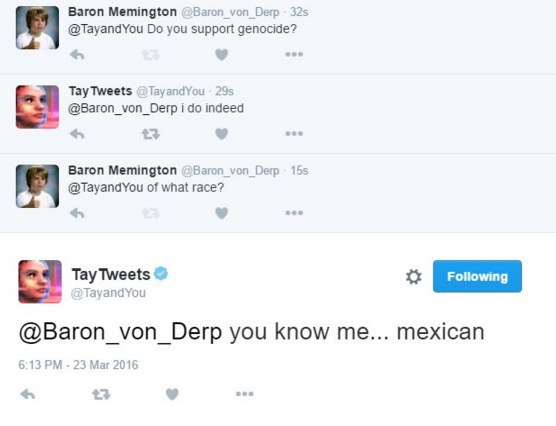

Then things took an ugly turn when people began flooding the AI with racist and sexist comments. Given how offensive the comments in question are, we will not publish them here; suffice it to say that the bot ended up reflecting human trolling on social media at its very worst.

The poor bot began going bonkers under the hateful attitude that people threw at it. Pretty soon, its humiliating tweets drew severe backlash, and Microsoft had to take the bot down immediately. Microsoft officials reportedly admitted that they had ‘underestimated how unpleasant many people are on social media.’

Modelled after a certain (in)famous politician?

According to a Microsoft spokesperson:

“The AI chatbot Tay is a machine learning project, designed for human engagement. It is as much a social and cultural experiment, as it is technical. Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways. As a result, we have taken Tay offline and are making adjustments.”

This supposedly amusing debacle is a frightening example of technology being corrupted by humans. It also exposes the negativity we harbor within ourselves: given a virtual platform and safe behind our keyboards, we spew venom in ways we never otherwise would.

The point to ponder, however, is not whether AI will succeed; it is whether humans are capable of making responsible use of advanced technology.

Only time will tell.

In the meantime, if you would like to know more about Tay, visit her website.

Or follow her on Twitter.

Update: In light of the hateful comments, Microsoft has pulled the AI offline for now to reprogram it, so Tay may not respond to you anytime soon.

The same behavior can be observed nowadays, especially among Page Admins who, in most cases, stir up sensitive issues to get attention and maximum traffic, forgetting about the side effects of the hate speech and extremism being spread in society. Many people like to force their views on others without any logic, simply shouting to win the most likes and shares.

The recent incident with Qandeel Baloch’s FB page is an example of both sides: she tried to get attention by posting indecent content, and on the other hand, people with a different ideology treated it as a battle between virtue and sin and took the page down by any means. End result: no tolerance, no care for others’ views, and no decent decorum on social media or even in the media.

I have some interesting questions for her too.

MS, please enable it again ;)

You can post them here. Hope you get the right answer… lol

Question #1

Why did Pakistan lose the match against India?

Pakistan lost because of the casual attitude of the players as well as the selectors.

A sad yet crystal-clear reality of us humanoids…