Anthropic’s Claude 3 Opus has taken the top spot on the Chatbot Arena leaderboard. The result is a notable shift: it pushes OpenAI’s GPT-4 into second place for the first time since the leaderboard launched last year.

Unlike traditional benchmarks that score AI models on fixed test sets, the LMSYS Chatbot Arena relies on human judgment. Users submit a prompt, receive responses from two anonymous models, and vote for the better answer in a blind test.

GPT-4 has led this benchmark for so long that any model approaching its performance is commonly described as “GPT-4 class,” which is why Claude 3’s result is so noteworthy.

However, Claude 3 Opus’s lead is narrow: its score is very close to GPT-4’s. Claude 3 may not hold the top position for long, since GPT-4.5 is expected to be released soon.

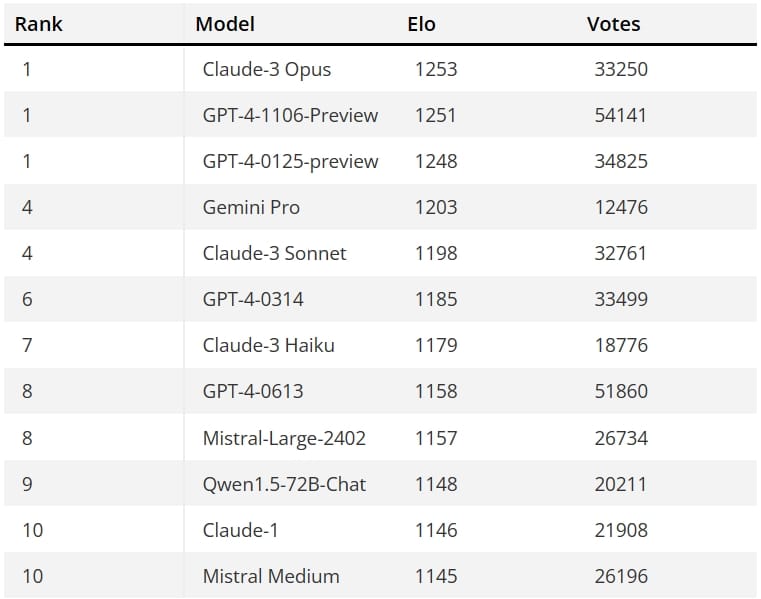

Operated by the Large Model Systems Organization (LMSYS), the Chatbot Arena pits a diverse range of large language models against each other in anonymous, randomized battles. Since its launch last year, the benchmark has collected over 400,000 user votes. Models from OpenAI, Google, and Anthropic have held places in the top 10 throughout that time, but models from Mistral and Alibaba, including open-source releases, have recently reached the top spots as well.

The benchmark uses the Elo rating system, familiar from chess and competitive gaming, to estimate each participant’s skill level. Here, however, the participants are not human players but the AI models behind the chatbots.
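To make the idea concrete, here is a minimal sketch of the classic Elo update applied to pairwise chatbot votes. Each vote is treated as a match: model A’s expected score against B is 1 / (1 + 10^((R_B − R_A) / 400)), and after the vote A’s rating moves by K × (actual score − expected score), with B moving by the same amount in the opposite direction. The starting rating of 1000, the K-factor of 32, and the model names are illustrative assumptions, not LMSYS’s published settings, and the leaderboard’s actual computation may differ.

```python
# Illustrative constants (assumptions, not LMSYS's published settings).
INITIAL_RATING = 1000.0
K_FACTOR = 32.0


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the Elo logistic model (base 10, scale 400)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_ratings(ratings: dict[str, float], model_a: str, model_b: str,
                   score_a: float, k: float = K_FACTOR) -> None:
    """Apply one human vote in place.

    score_a is 1.0 if A's answer won, 0.0 if B's answer won, 0.5 for a tie.
    """
    ra = ratings.setdefault(model_a, INITIAL_RATING)
    rb = ratings.setdefault(model_b, INITIAL_RATING)
    delta = k * (score_a - expected_score(ra, rb))
    # Zero-sum: whatever A gains, B loses.
    ratings[model_a] = ra + delta
    ratings[model_b] = rb - delta


# Hypothetical votes: (model A, model B, score for A).
votes = [
    ("model-x", "model-y", 1.0),  # voter preferred model-x
    ("model-y", "model-z", 0.5),  # tie
    ("model-x", "model-z", 1.0),  # voter preferred model-x
]

ratings: dict[str, float] = {}
for a, b, s in votes:
    update_ratings(ratings, a, b, s)

leaderboard = sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)
for rank, (name, rating) in enumerate(leaderboard, start=1):
    print(f"{rank}. {name}: {rating:.1f}")
```

Because each update depends on the gap between the two ratings, an upset win against a higher-rated model moves the scores more than an expected win does, which is why a newcomer can climb quickly once it starts beating the leader.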

Claude 3 Opus, the largest model in the Claude 3 family, took the top spot in a leaderboard update that incorporated over 70,000 new votes. Notably, the smaller Claude 3 models performed well too. Claude 3 Haiku, the smallest and fastest model in the series, is achieving impressive results without the scale of GPT-4 or Claude 3 Opus. Despite its compact size, Haiku is served in the cloud rather than designed to run on consumer devices like Google’s Gemini Nano.

All three Claude 3 models placed in the top 10: Opus ranked first, Sonnet tied for fourth with Gemini Pro, and Haiku tied for sixth with an older version of GPT-4.