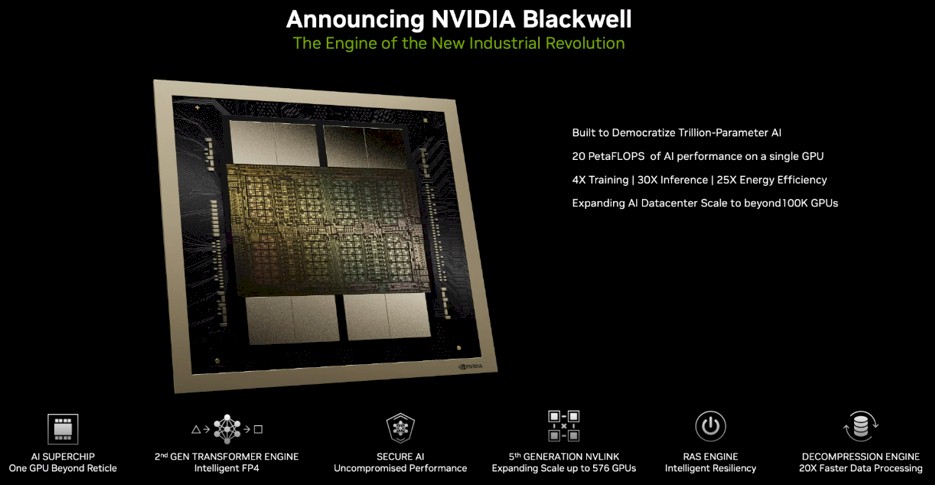

Nvidia has lifted the curtain on its latest iteration of graphics processing units (GPUs) for AI training dubbed the Blackwell series. These cutting-edge GPUs tout a staggering 25x improvement in energy efficiency, offering reduced costs for AI processing tasks.

At the forefront of this release is the Nvidia GB200 Grace Blackwell Superchip, engineered with multiple chips within the same package. This new architecture pledges remarkable performance enhancements, boasting up to a 30x increase in performance for LLM inference workloads when compared to its predecessors.

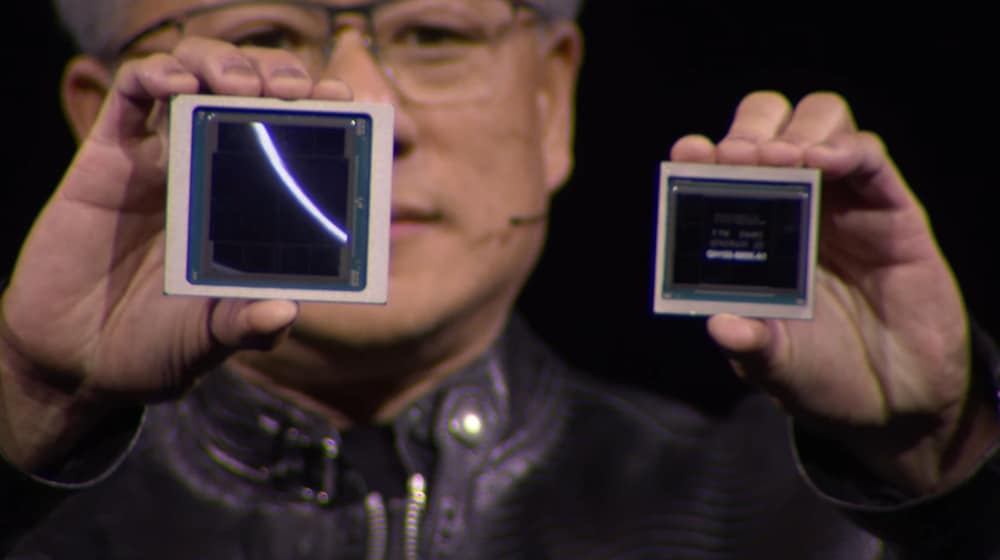

Addressing an audience of thousands of engineers at Nvidia GTC 2024 during a keynote presentation, Nvidia CEO Jensen Huang unveiled the Blackwell series, heralding it as the harbinger of a transformative era in computing. While gaming products are expected to follow, Huang’s focus was squarely on the technological advancements driving this new generation.

In a lighthearted moment during the keynote, Huang humorously remarked about the staggering value of the prototypes he held, jesting that they were worth $10 billion and $5 billion respectively.

Nvidia claims that Blackwell-based supercomputers will allow organizations around the globe to enable large language models (LLMs) based on tens of trillions of parameters which will bring real-time generative AI processing, all while reducing costs and power consumption by 25x.

This enhanced processing capability will seamlessly scale to accommodate AI models with up to 10 trillion parameters, ushering in a new era of efficiency and productivity in AI-driven tasks.

Other than generative AI processing, these new Blackwell AI GPUs, named after the mathematician David Harold Blackwell, will also be used in data processing, engineering simulation, electronic design automation, computer-aided drug design, and quantum computing.

Technical specifications include 208 billion transistors and TSMC’s 4NP manufacturing process which is based on a custom-built, two-reticle limit that brings significant amounts of processing power.

NVLink facilitates a bidirectional throughput of 1.8TB/s per GPU, enabling uninterrupted high-speed communication across a network of up to 576 GPUs, ideal for handling today’s most intricate Large Language Models (LLMs).

At the heart of this innovation lies the NVIDIA GB200 NVL72, a rack-scale system engineered to deliver a staggering 1.4 exaflops of AI performance and equipped with 30TB of fast memory.

The GB200 Superchip represents a significant advancement, offering up to a 30-fold increase in performance compared to the Nvidia H100 Tensor Core GPU for LLM inference workloads, while concurrently slashing costs and energy consumption by up to 25 times.

Cisco, Dell Technologies, Hewlett Packard Enterprise, Lenovo, and Supermicro are poised to introduce a diverse array of servers leveraging Blackwell products. Additionally, Aivres, ASRock Rack, ASUS, Eviden, Foxconn, Gigabyte, Inventec, Pegatron, QCT, Wistron, Wiwynn, and ZT Systems are also expected to join in delivering servers equipped with Blackwell-based technology.

Anticipated to be embraced by major cloud providers, server manufacturers, and prominent AI companies such as Amazon, Google, Meta, Microsoft, and OpenAI, the Blackwell platform is positioned to spearhead a paradigm shift in computing across various industries.